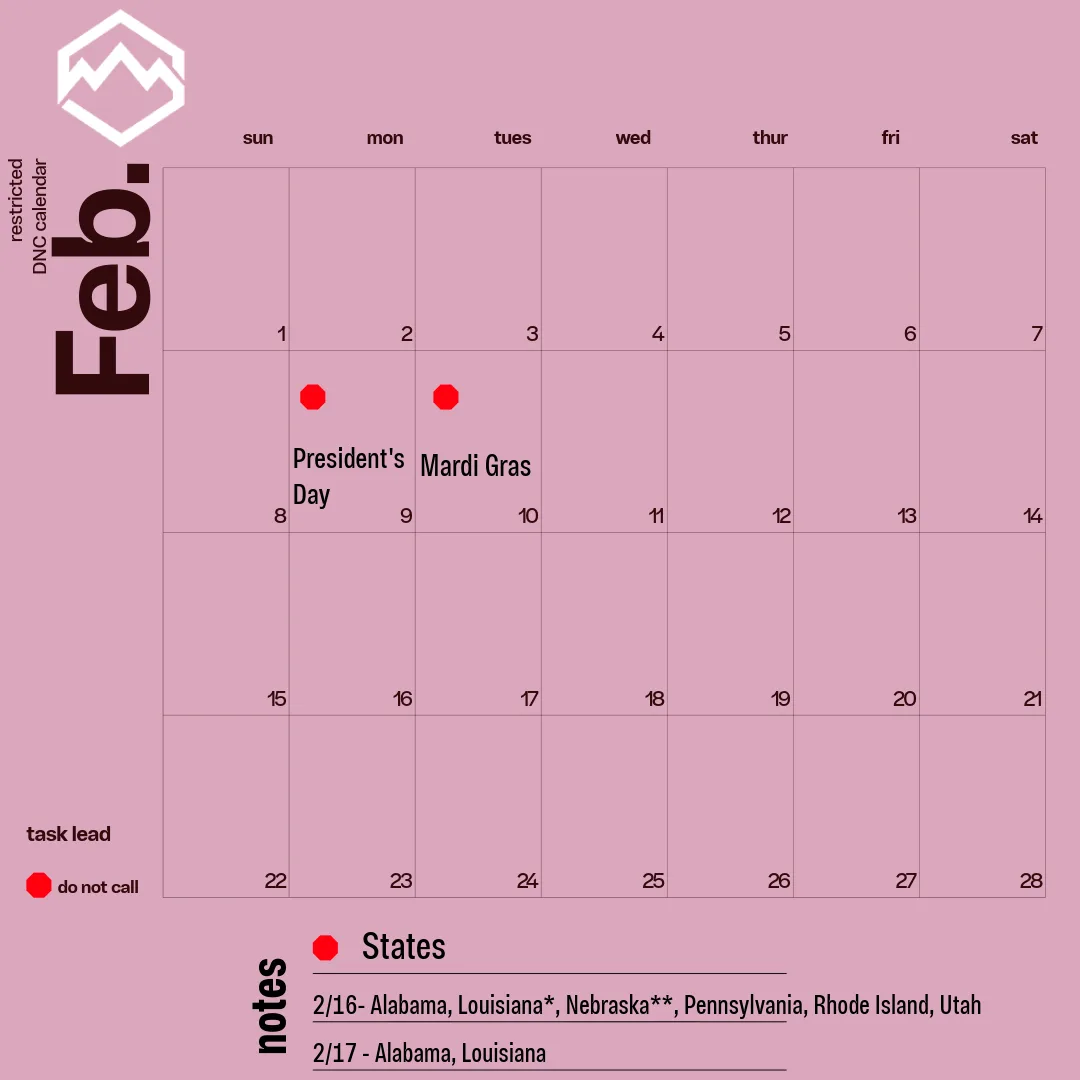

February 2026 Restricted Call Dates for Insurance Agencies

For insurance agencies and brokers, outbound calling remains a critical channel for lead follow-up, policy reviews, renewals, and cross-sell opportunities. It’s important to remember that not all days are equal when it comes to compliance.

February 2026 Restricted Calling Dates (U.S. Only)

February 16, 2026 – President’s Day

- Alabama
- Louisiana*

[…]